User-friendly LLaMa 3.2 Multimodal Web UI using Ollama

LLaMa 3.2 Multimodal Web UI is a user-friendly interface for interacting with the LLaMa 3.2 multimodal model on the Ollama platform. It is designed to handle both text and image inputs (image upload is coming soon), allowing users to ask questions, submit prompts, and receive responses as text, code, and even visual outputs, making multimodal AI accessible to everyone.

This code has been tested on Ubuntu.

Features

- Multimodal Input Support: Submit text and image inputs to receive context-aware responses.

- Formatted Responses: Highlighted code blocks and detailed explanations for code outputs.

- Copy-to-Clipboard: Easily copy code snippets directly from the UI for seamless integration into your projects.

- Responsive UI: Optimized for both desktop and mobile devices.

- Interactive Output: View text, images, and other media types generated by Llama 3.2.

Installation

To run LLaMa 3.2 Multimodal Web UI locally, follow these steps:

- Clone the repository:

  git clone https://github.com/iamgmujtaba/llama3.2-webUI

- Navigate to the project directory:

  cd llama3.2-webUI

- Ensure Ollama is installed on your machine. If it is not, install it by following the instructions on the Ollama website.

- Run the application using the included shell script:

  bash run.sh

  This starts the local development server and automatically opens the application in your default web browser at http://localhost:8000.
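Before running `bash run.sh`, it can help to confirm that Ollama is actually ready. The following is a minimal preflight sketch, not part of the repository. It assumes Ollama's default API address (http://localhost:11434), the model tag `llama3.2-vision`, and the hypothetical variable names `OLLAMA_URL` and `OLLAMA_MODEL`; `run.sh` may already handle some of these steps.

```bash
#!/usr/bin/env bash
# Preflight sketch: check that Ollama is ready before starting the web UI.
# Assumptions (not from the project): Ollama's default API address
# http://localhost:11434, the model tag "llama3.2-vision", and the
# hypothetical variable names OLLAMA_URL and OLLAMA_MODEL.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"
OLLAMA_MODEL="${OLLAMA_MODEL:-llama3.2-vision}"

check_ollama() {
  # 1. Is the ollama CLI installed and on PATH?
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found on PATH; install Ollama first" >&2
    return 1
  fi
  # 2. Is the Ollama server answering? /api/tags lists locally pulled models.
  if ! curl -fsS "$OLLAMA_URL/api/tags" >/dev/null; then
    echo "Ollama server not reachable at $OLLAMA_URL (try: ollama serve)" >&2
    return 2
  fi
  # 3. Has the model been pulled? "ollama list" prints one model per row.
  if ! ollama list | grep -q "^$OLLAMA_MODEL"; then
    echo "model $OLLAMA_MODEL not found (try: ollama pull $OLLAMA_MODEL)" >&2
    return 3
  fi
  echo "Ollama is ready: $OLLAMA_MODEL at $OLLAMA_URL"
}
```

After sourcing or pasting the function, run `check_ollama`; a non-zero exit code (1, 2 or 3) indicates which of the three checks failed.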

Usage

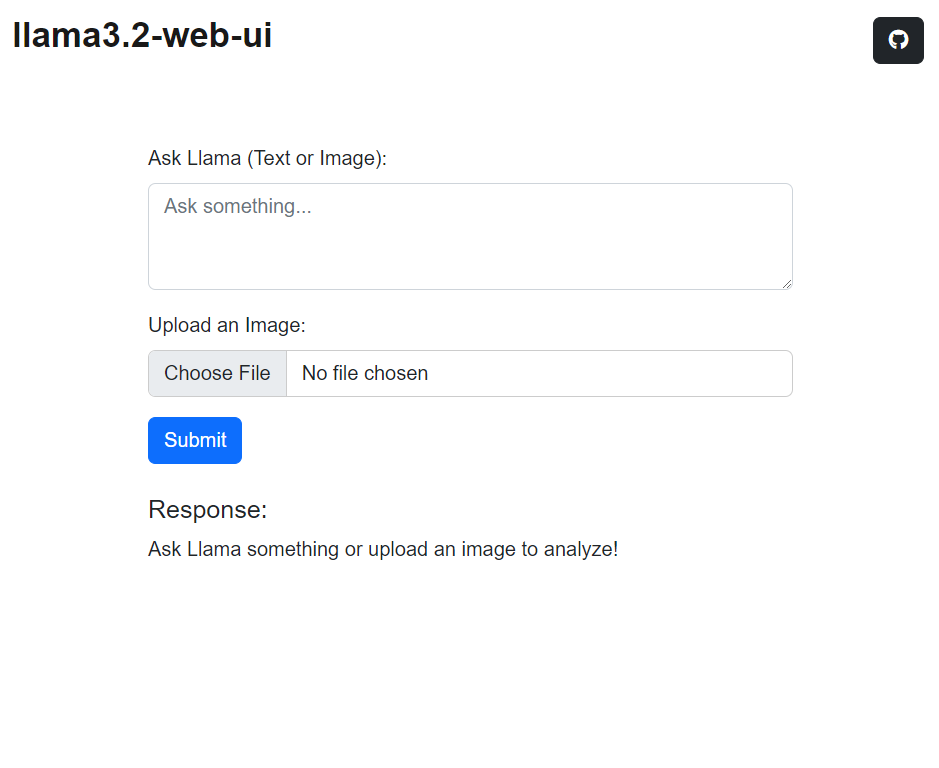

1. Interacting with the UI

Once the interface loads in your browser, you can:

- Submit Text Inputs: Type your question or prompt in the input box and hit Submit.

- Upload Images (Coming soon): You will soon be able to upload images and receive both text and visual responses from the model.
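To illustrate roughly what a text or image prompt looks like when sent to Ollama, the sketch below calls Ollama's REST API directly with curl. It is not the project's code: the UI may use a different endpoint or options, and the address http://localhost:11434, the model tag `llama3.2-vision`, and the function names `json_escape`, `build_request` and `ask_ollama` are hypothetical choices for this example. The optional image argument mirrors the upcoming image-upload feature by passing a base64-encoded file in the `images` field.

```bash
#!/usr/bin/env bash
# Sketch: send a prompt (and optionally one image) to Ollama's /api/generate.
# Assumptions: default address http://localhost:11434, model tag
# "llama3.2-vision"; all names below are hypothetical, not the project's.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"
OLLAMA_MODEL="${OLLAMA_MODEL:-llama3.2-vision}"

# Escape a string for use inside a JSON string literal
# (handles backslash, double quote, newline, carriage return and tab).
json_escape() {
  local s=$1
  s=${s//\\/\\\\}
  s=${s//\"/\\\"}
  s=${s//$'\n'/\\n}
  s=${s//$'\r'/\\r}
  s=${s//$'\t'/\\t}
  printf '%s' "$s"
}

# Build the JSON request body; $1 is the prompt, $2 (optional) an image path.
build_request() {
  local prompt images=""
  prompt=$(json_escape "$1")
  if [ -n "${2:-}" ]; then
    # Images are sent as base64 strings; GNU base64 -w0 disables line wrapping.
    images=",\"images\":[\"$(base64 -w0 "$2")\"]"
  fi
  printf '{"model":"%s","prompt":"%s","stream":false%s}' \
    "$OLLAMA_MODEL" "$prompt" "$images"
}

# POST the request and print Ollama's JSON reply (answer is in "response").
ask_ollama() {
  build_request "$@" | curl -fsS "$OLLAMA_URL/api/generate" \
    -H 'Content-Type: application/json' --data-binary @-
}
```

For example, `ask_ollama "Explain recursion in one sentence."` sends a text prompt, and `ask_ollama "What is in this picture?" ./photo.jpg` (a hypothetical file) attaches an image. With `"stream": false`, Ollama returns a single JSON object instead of a stream of partial responses.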

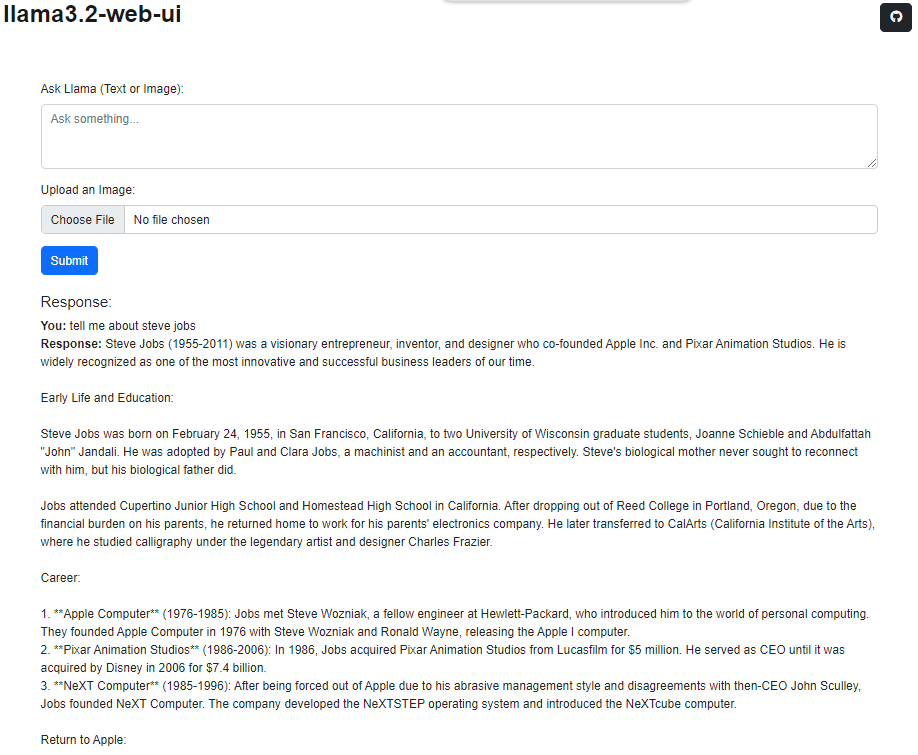

2. Viewing Responses

- Formatted Text: Responses are displayed in a clean, readable format.

- Highlighted Code: Any code snippets returned are syntax-highlighted for better readability.

- Multimodal Responses: Once image inputs are supported, you will also receive generated images, annotations, or detailed content explanations.
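Model replies are Markdown, so code snippets are delimited by triple-backtick fences. The sketch below shows one simple way to pull those snippets out of a saved reply, similar in spirit to what code highlighting and copy-to-clipboard need. It is a hypothetical helper (`extract_code_blocks` is not from the project) and it does not handle tilde (~~~) fences.

```bash
#!/usr/bin/env bash
# Sketch: print only the contents of fenced code blocks from a Markdown
# reply read on stdin, dropping the fence lines themselves.
# Hypothetical helper for illustration; not the project's implementation.
extract_code_blocks() {
  awk '
    /^[ \t]*```/ { inside = !inside; next }  # toggle on each fence line
    inside                                    # print lines between fences
  '
}
```

For example, `extract_code_blocks < reply.md` prints the code from a saved reply (reply.md is a hypothetical file); on Ubuntu, piping the result into `xclip -selection clipboard` (if xclip is installed) copies it to the clipboard.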

Contributing

Contributions are always welcome.

License

This project is licensed under the MIT License. See the LICENSE file for more details.

This project is maintained on GitHub. For more information, visit the LLaMa 3.2 Multimodal Web UI GitHub repository.
