DeepSeek WebUI Installation Using Ollama on Nvidia Jetson Nano

This post shows how to set up DeepSeek-R1, a powerful reasoning model that runs locally, using Ollama and Open WebUI, a browser interface similar to ChatGPT.

Prerequisites

- Python 3.11 or higher

- Conda package manager

- Admin/sudo privileges for installation

- 8GB RAM minimum (16GB+ recommended)

- Note: The demo was tested on Ubuntu Linux
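Before starting, it can help to confirm these prerequisites on the target machine. The following is a minimal Python sketch, not part of the original setup: the function names are hypothetical, and the RAM check assumes os.sysconf exposes the page counters, as it does on Linux.

```python
import os
import shutil
import sys


def python_ok(version=sys.version_info, minimum=(3, 11)):
    """Return True if the (major, minor) version meets the stated minimum."""
    return tuple(version[:2]) >= minimum


def total_ram_gib():
    """Total physical memory in GiB, or None if the platform does not report it."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None
    return pages * page_size / 2**30


def conda_on_path():
    """Return True if a conda executable can be found on PATH."""
    return shutil.which("conda") is not None


if __name__ == "__main__":
    print("Python 3.11+ :", python_ok())
    print("conda found  :", conda_on_path())
    ram = total_ram_gib()
    # An "8 GB" board often reports a little less than 8 GiB here.
    print("RAM (GiB)    :", "unknown" if ram is None else round(ram, 1))
```

Run it with the system Python before creating the environment. It only reports values and does not change anything.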

Step-by-Step Installation Guide

1. Create and Activate Conda Environment

First, set up a dedicated virtual environment using Conda:

conda create -n webui python=3.11 -y && conda activate webui

2. Install Ollama

Ollama is a lightweight model server that manages and runs AI models locally. Choose your operating system below for installation instructions:

Linux

curl -fsSL https://ollama.com/install.sh | sh

# Verify installation

ollama --version

macOS

- Download the latest version from Ollama for macOS

- Open the downloaded .dmg file

- Drag Ollama to your Applications folder

- Launch Ollama from Applications

Windows

- Download the installer from Ollama for Windows

- Run the downloaded .exe file

- Follow the installation wizard

- Launch Ollama from the Start menu
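Whichever platform you installed on, the version command only confirms that the CLI is present. To check that the Ollama server itself is answering, you can query its HTTP API. This sketch assumes the default address http://localhost:11434 and uses the /api/version endpoint. The helper name is illustrative, and proxies are deliberately bypassed because the target is local.

```python
import json
import urllib.request


def ollama_version(base_url="http://localhost:11434", timeout=3.0):
    """Return the running server's version string, or None if unreachable."""
    # An empty ProxyHandler disables any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(f"{base_url}/api/version", timeout=timeout) as resp:
            data = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections and timeouts;
        # ValueError covers a body that is not valid JSON.
        return None
    return data.get("version") if isinstance(data, dict) else None


if __name__ == "__main__":
    version = ollama_version()
    print("Ollama server:", version or "not reachable")
```

If this prints "not reachable" even though the CLI is installed, the server process is probably not running yet.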

3. Install Open WebUI

Set up the web interface using open-webui:

pip install open-webui

4. Install DeepSeek Models using Ollama

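Choose your preferred model size. The ollama run command downloads the model on first use and then opens an interactive prompt; type /bye to exit: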

# DeepSeek-R1-Distill-Qwen-1.5B

ollama run deepseek-r1:1.5b

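You can install the other DeepSeek-R1 variants the same way. Larger models need proportionally more memory, and the full 671B model is far beyond what a Jetson Nano or a typical desktop can hold.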

# DeepSeek-R1

ollama run deepseek-r1:671b

# DeepSeek-R1-Distill-Qwen-7B

ollama run deepseek-r1:7b

# DeepSeek-R1-Distill-Llama-8B

ollama run deepseek-r1:8b

# DeepSeek-R1-Distill-Qwen-14B

ollama run deepseek-r1:14b

# DeepSeek-R1-Distill-Qwen-32B

ollama run deepseek-r1:32b

# DeepSeek-R1-Distill-Llama-70B

ollama run deepseek-r1:70b
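Once a model is downloaded, you can also query it from a script instead of the interactive prompt. The sketch below posts to Ollama's /api/generate endpoint with streaming turned off. It assumes the default server address and that the model wraps its reasoning in <think>...</think> tags inside the response text; the helper names are hypothetical.

```python
import json
import re
import urllib.request


def build_generate_request(prompt, model="deepseek-r1:1.5b"):
    """Build the JSON body for a single non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def split_reasoning(text):
    """Separate a <think>...</think> block (if any) from the final answer."""
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


def generate(prompt, model="deepseek-r1:1.5b",
             base_url="http://localhost:11434", timeout=300.0):
    """Send the prompt to a local Ollama server and return the raw response text."""
    body = json.dumps(build_generate_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    reasoning, answer = split_reasoning(generate("What is 17 * 3?"))
    print("Answer:", answer)
```

Separating the reasoning from the answer is optional. It is shown here because a reasoning model's raw output can be much longer than the answer itself.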

5. Launch the Web Interface

Start the WebUI server:

open-webui serve

6. Access the Interface

- Open your browser

- Navigate to http://localhost:8080

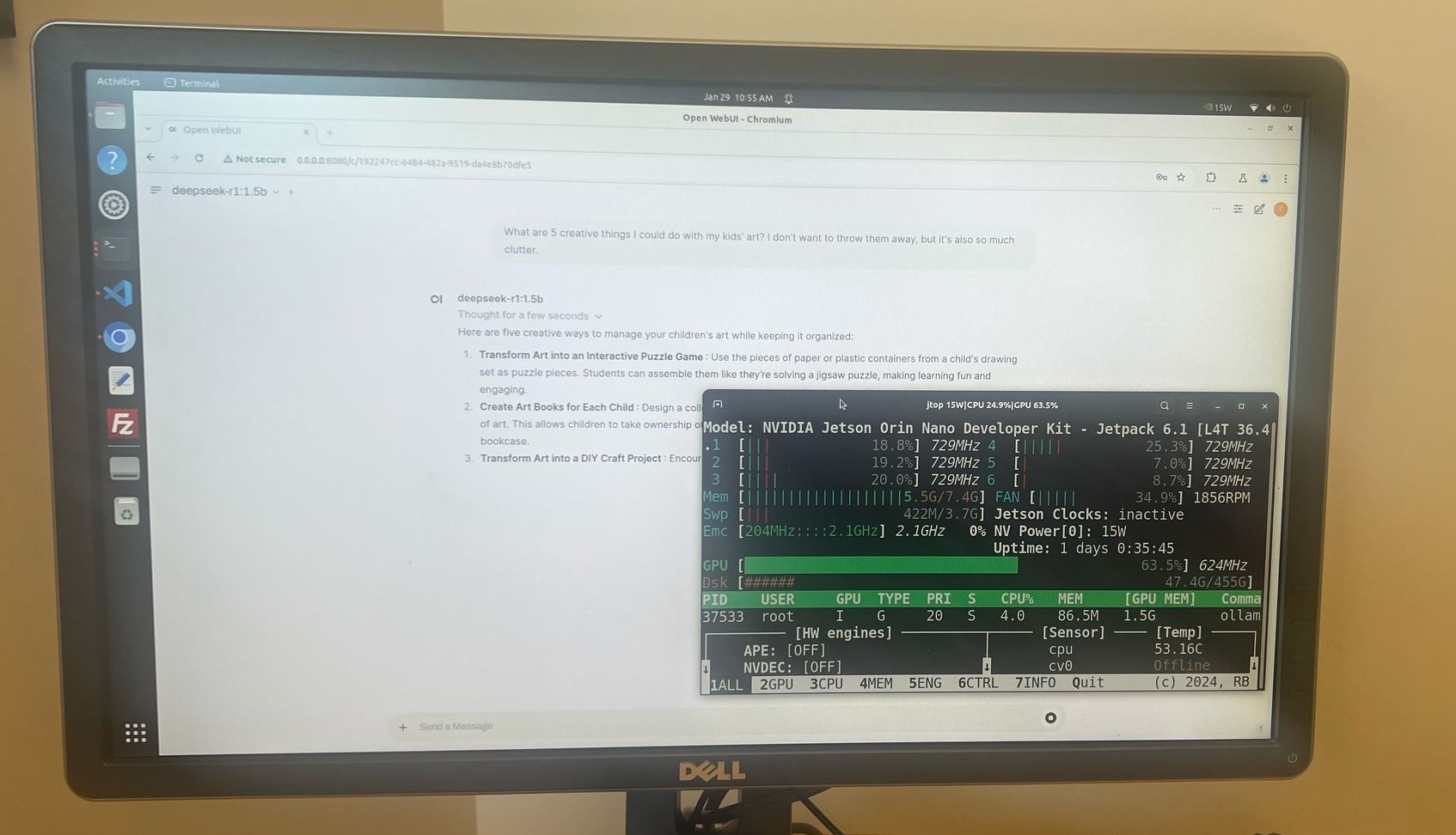
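If the page does not load right away, the server may still be starting. A small poller like the one below can wait for it. This is a sketch under the assumption that the WebUI listens on http://localhost:8080 as above; the function name and retry values are illustrative.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url="http://localhost:8080", attempts=30, delay=2.0, timeout=3.0):
    """Poll url until something answers over HTTP; return True on success."""
    # An empty ProxyHandler keeps local requests away from any configured proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    for attempt in range(attempts):
        try:
            with opener.open(url, timeout=timeout):
                return True
        except urllib.error.HTTPError:
            # The server replied with an error status, so it is listening.
            return True
        except OSError:
            # Connection refused or timed out: nothing is listening yet.
            if attempt < attempts - 1:
                time.sleep(delay)
    return False


if __name__ == "__main__":
    print("WebUI reachable:", wait_for_http())
```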

Demo

After installation, access the WebUI through your browser and start interacting with DeepSeek-R1.

Nvidia Jetson Nano

DeepSeek-R1 also runs on edge devices such as the Nvidia Jetson Nano with only 8 GB of RAM. I tested the 1.5B-parameter model (deepseek-r1:1.5b), and it runs smoothly.
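To get a feel for why the small model suits an 8 GB device, you can estimate the memory the weights alone need as parameters times bits per weight divided by 8. The sketch below is back-of-the-envelope arithmetic, not a measurement. It assumes roughly 4 bits per weight (a common quantization level, assumed here rather than confirmed for each tag), takes the nominal sizes from the tag names, and reserves a hypothetical 2 GB for the OS and context.

```python
# Nominal parameter counts (in billions), taken from the tag names.
MODEL_SIZES_B = {
    "deepseek-r1:1.5b": 1.5,
    "deepseek-r1:7b": 7,
    "deepseek-r1:8b": 8,
    "deepseek-r1:14b": 14,
    "deepseek-r1:32b": 32,
    "deepseek-r1:70b": 70,
    "deepseek-r1:671b": 671,
}


def weight_memory_gb(params_billions, bits_per_weight=4.0):
    """Approximate size of the weights in GB (1 GB = 1e9 bytes).

    billions of params * bits per weight / 8 bits per byte = GB
    """
    return params_billions * bits_per_weight / 8


def tags_that_fit(ram_gb, headroom_gb=2.0, bits_per_weight=4.0):
    """List tags whose estimated weights fit in ram_gb minus the headroom."""
    budget = ram_gb - headroom_gb
    return [
        tag
        for tag, size in MODEL_SIZES_B.items()
        if weight_memory_gb(size, bits_per_weight) <= budget
    ]


if __name__ == "__main__":
    for tag, size in MODEL_SIZES_B.items():
        print(f"{tag:18s} ~{weight_memory_gb(size):7.2f} GB of weights")
    print("Candidates for 8 GB:", tags_that_fit(8))
```

Under these assumptions the 1.5B model needs about 0.75 GB for its weights, which leaves plenty of room on an 8 GB board. Treat the list of candidates as a rough upper bound on what to try, since only the 1.5b model was actually tested here.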
